Introduction

The first thing I did when I started programming in Go was begin porting my Windows utility classes and service frameworks over to Linux. This is what I did when I moved from C++ to C#. Thank goodness, I soon learned about Iron.IO and the services they offered. Then it hit me: if I wanted true scalability, I needed to start building worker tasks that could be queued to run anywhere at any time. It was not about how many machines I needed; it was about how much compute time I needed.

Figure: Outcast Marine Forecast

The freedom that comes with architecting a solution around web services and worker tasks is refreshing. If I need 1,000 instances of a task to run, I can just queue them up. I don’t need to worry about capacity, resources, or any other IT-related issues. If my service becomes an instant hit overnight, the architecture is ready and the capacity is available.

My mobile weather application, Outcast, is a prime example. I currently have a single scheduled task that runs in Iron.IO every 10 minutes. This task updates the marine forecast areas for the United States by downloading and parsing 472 web pages from the NOAA website. We are about to add Canada, and eventually we want to move into Europe and Australia. At that point, a single scheduled task will no longer be a scalable or redundant architecture for this process.

Thanks to the Go Client from Iron.IO, I can build a task that wakes up on a schedule and queues up as many marine forecast area worker tasks as needed. With this architecture, each marine forecast area is processed independently in its own worker task, which provides a great deal of scalability and redundancy. The best part: I don’t have to think about hardware or IT-related capacity issues.

Create a Worker Task

Back in September I wrote a post about building and uploading an Iron.IO worker task using Go:

https://www.ardanlabs.com/blog/2013/09/running-go-programs-in-ironworker.html

This task simulated 60 seconds of work and ran experiments to understand some of the capabilities of the worker task container. We are going to use this worker task to demonstrate how to use the Go Client to queue a task. If you want to follow along, go ahead and walk through the post and create the worker task.

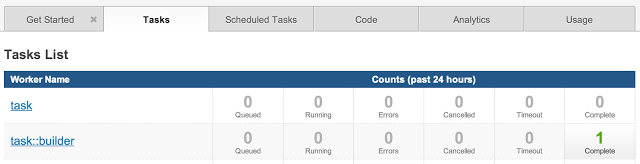

I am going to assume you walked through the post and created the worker called "task" as depicted in the image below:

Download The Go Client

Download the Go Client from Iron.IO:

Now navigate to the examples folder:

The examples leverage the API that can be found here:

http://dev.iron.io/worker/reference/api/

Not all of the API calls are represented in these examples, but the rest of the API can easily be implemented by following them.

In this post we are going to focus on the task API calls. These are the APIs you will most likely be able to leverage in your own programs and architectures.

Queue a Task

Open up the queue example from the examples/tasks folder. We will walk through the more important aspects of the code.

To queue a task with the Go client, we need to create this document, which will be posted with the request:

    {
        "tasks": [
            {
                "code_name": "MyWorker",
                "timeout" : 60,
                "payload": "{\"x\": \"abc\", \"y\": \"def\"}"
            }
        ]
    }
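Note that `payload` is itself a string that contains JSON, which is why its quotes are escaped. A minimal sketch (the struct and function names are my own, not the Go client's) shows `encoding/json` producing that escaping when the inner document is marshaled first and then stored in a string field:

```go
package main

import "encoding/json"

// queueRequest models the document posted to queue tasks.
type queueRequest struct {
	Tasks []queuedTask `json:"tasks"`
}

// queuedTask is one entry in the "tasks" array.
type queuedTask struct {
	CodeName string `json:"code_name"`
	Timeout  int    `json:"timeout"`
	Payload  string `json:"payload"`
}

// exampleDocument rebuilds the MyWorker document shown above.
func exampleDocument() (string, error) {
	// Marshal the worker's own data first...
	inner, err := json.Marshal(map[string]string{"x": "abc", "y": "def"})
	if err != nil {
		return "", err
	}
	// ...then embed it as a string, which escapes its quotes.
	outer, err := json.Marshal(queueRequest{
		Tasks: []queuedTask{{
			CodeName: "MyWorker",
			Timeout:  60,
			Payload:  string(inner),
		}},
	})
	return string(outer), err
}
```

The result matches the hand-written document except for whitespace.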

In the case of our worker task, the payload document in Go should look like this:

    payload := `{"tasks":[
    {
        "code_name" : "task",
        "timeout" : 120,
        "payload" : ""
    }]}`
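Because the document is a hand-written raw string, a typo only shows up when the request is rejected. A small sketch of a hypothetical helper, `checkTasksDocument`, that decodes the string locally with `encoding/json` before it is sent:

```go
package main

import (
	"encoding/json"
	"errors"
)

// checkTasksDocument verifies the document is valid JSON and holds at
// least one task with a code name before we post it.
func checkTasksDocument(doc string) error {
	var parsed struct {
		Tasks []struct {
			CodeName string `json:"code_name"`
			Timeout  int    `json:"timeout"`
			Payload  string `json:"payload"`
		} `json:"tasks"`
	}
	if err := json.Unmarshal([]byte(doc), &parsed); err != nil {
		return err // syntax errors, wrong field types
	}
	if len(parsed.Tasks) == 0 {
		return errors.New("document contains no tasks")
	}
	for _, t := range parsed.Tasks {
		if t.CodeName == "" {
			return errors.New("task is missing code_name")
		}
	}
	return nil
}
```

Calling `checkTasksDocument(payload)` right after declaring `payload` catches a missing bracket before any network call is made.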

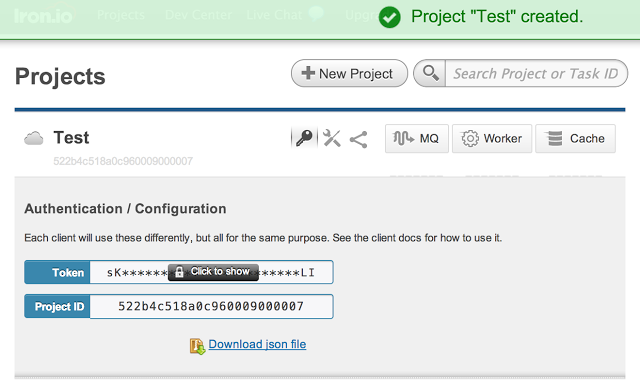

Now let's look at the code that will request our task to be queued. The first thing we need to do is set our project ID and token:

    config.ProjectId = "your_project_id"
    config.Token = "your_token"
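Hard-coding the token is fine for a quick test, but a scheduled task usually reads it from its environment. A sketch, assuming environment variables named `IRON_PROJECT_ID` and `IRON_TOKEN` (names chosen for this example); the values it returns would then be assigned to `config.ProjectId` and `config.Token` as above:

```go
package main

import (
	"errors"
	"os"
)

// credentials holds the two values the Go client needs.
type credentials struct {
	ProjectID string
	Token     string
}

// loadCredentials reads the project ID and token through lookup, which is
// os.LookupEnv in production and a stub in tests.
func loadCredentials(lookup func(string) (string, bool)) (credentials, error) {
	projectID, ok := lookup("IRON_PROJECT_ID")
	if !ok || projectID == "" {
		return credentials{}, errors.New("IRON_PROJECT_ID is not set")
	}
	token, ok := lookup("IRON_TOKEN")
	if !ok || token == "" {
		return credentials{}, errors.New("IRON_TOKEN is not set")
	}
	return credentials{ProjectID: projectID, Token: token}, nil
}

// loadFromEnv reads the credentials from the process environment.
func loadFromEnv() (credentials, error) {
	return loadCredentials(os.LookupEnv)
}
```

Passing the lookup function in keeps the logic testable without touching the real environment.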

As described in the post from September, this information can be found inside our project configuration:

Now we can use the Go Client to build the URL and prepare the payload for the request:

    postData := bytes.NewBufferString(payload)
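To make the client's work less magical, here is a rough standard-library equivalent of the request it prepares. The endpoint layout (`/2/projects/{project_id}/tasks`) and the `Authorization: OAuth <token>` header are assumptions based on the IronWorker v2 API reference linked above, so check them against the docs; `newQueueRequest` is a hypothetical helper, not part of the Go client, and the host is left as a parameter.

```go
package main

import (
	"bytes"
	"net/http"
	"net/url"
)

// newQueueRequest builds a POST to the tasks endpoint for a project.
// Assumed layout: https://<host>/2/projects/<project_id>/tasks
func newQueueRequest(host, projectID, token string, doc []byte) (*http.Request, error) {
	u := url.URL{
		Scheme: "https",
		Host:   host,
		Path:   "/2/projects/" + projectID + "/tasks",
	}
	req, err := http.NewRequest(http.MethodPost, u.String(), bytes.NewReader(doc))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	// Assumed auth scheme: the project token sent as an OAuth header.
	req.Header.Set("Authorization", "OAuth "+token)
	return req, nil
}
```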

Using the URL object, we can send the request to Iron.IO and capture the response:

    // Check the error before deferring Close: when the request fails, resp may be nil.
    if err != nil {
        log.Println(err)
        return
    }
    defer resp.Body.Close()

    body, err := ioutil.ReadAll(resp.Body)
    if err != nil {
        log.Println(err)
        return
    }

We want to check the response to make sure everything was successful. This is the response we should get back:

    {
        "msg": "Queued up",
        "tasks": [
            {
                "id": "4eb1b471cddb136065000010"
            }
        ]
    }

To unmarshal the result, we need these data structures:

    type (
        TaskResponse struct {
            Message string `json:"msg"`
            Tasks   []Task `json:"tasks"`
        }

        Task struct {
            Id string `json:"id"`
        }
    )

Now let's unmarshal the results:

    // Unmarshal needs a pointer so it can fill in the struct.
    var taskResponse TaskResponse
    err = json.Unmarshal(body, &taskResponse)
    if err != nil {
        log.Println(err)
        return
    }
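Putting the types and the call together, here is a self-contained sketch that decodes the sample response shown earlier and returns the queued task IDs. Treating any `msg` other than `Queued up` as a failure is my own convention for this example; the API reference may define a better success signal.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type (
	// TaskResponse is the document returned after queuing tasks.
	TaskResponse struct {
		Message string `json:"msg"`
		Tasks   []Task `json:"tasks"`
	}

	// Task carries the ID assigned to each queued task.
	Task struct {
		Id string `json:"id"`
	}
)

// queuedTaskIDs decodes the response body and returns the task IDs.
func queuedTaskIDs(body []byte) ([]string, error) {
	var taskResponse TaskResponse
	if err := json.Unmarshal(body, &taskResponse); err != nil {
		return nil, err
	}
	if taskResponse.Message != "Queued up" {
		return nil, fmt.Errorf("unexpected response message: %q", taskResponse.Message)
	}
	ids := make([]string, 0, len(taskResponse.Tasks))
	for _, t := range taskResponse.Tasks {
		ids = append(ids, t.Id)
	}
	return ids, nil
}
```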

If we would rather use a map than declare these types, we can do this:

    var results map[string]interface{}
    err = json.Unmarshal(body, &results)
    if err != nil {
        log.Println(err)
        return
    }
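The trade-off with the map is that every access needs a type assertion, because `encoding/json` decodes JSON objects into `map[string]interface{}` and arrays into `[]interface{}`. A sketch of pulling the task IDs out of such a map with comma-ok assertions, so an unexpected shape returns an error instead of panicking (`taskIDsFromMap` is a hypothetical helper):

```go
package main

import (
	"encoding/json"
	"errors"
)

// taskIDsFromMap extracts task IDs from a generically decoded response.
func taskIDsFromMap(body []byte) ([]string, error) {
	var results map[string]interface{}
	if err := json.Unmarshal(body, &results); err != nil {
		return nil, err
	}
	// JSON arrays decode to []interface{}.
	tasks, ok := results["tasks"].([]interface{})
	if !ok {
		return nil, errors.New(`"tasks" is missing or not an array`)
	}
	var ids []string
	for _, item := range tasks {
		// JSON objects decode to map[string]interface{}.
		task, ok := item.(map[string]interface{})
		if !ok {
			return nil, errors.New("task entry is not an object")
		}
		id, ok := task["id"].(string)
		if !ok {
			return nil, errors.New("task entry has no string id")
		}
		ids = append(ids, id)
	}
	return ids, nil
}
```

The map saves two type declarations but moves the shape checks into code like this, which is why the structs are usually the clearer choice.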

When we run the example code and everything works, we should see the following output:

    "msg": Queued up
    {
    "id": "52b4721726d9410296012cc8",
    },

If we navigate to the Iron.IO HUD, we should see the task was queued and completed successfully:

Conclusion

The Go client is doing a lot of the boilerplate work for us behind the scenes; we just need to make sure we have all the required configuration parameters. Queuing a task is one of the more complicated API calls. Look at the other examples to see how to get information about the tasks we queue and even retrieve their logs.
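For reference, those other calls follow the same URL pattern as queuing. A sketch of the paths, assuming the v2 layout `/2/projects/{project_id}/tasks/{task_id}` for a task's details and the same path plus `/log` for its log output, as read from the API reference linked earlier; verify them against the docs before relying on them. Both helper names are hypothetical.

```go
package main

import "net/url"

// taskURL returns the assumed endpoint for a task's details.
func taskURL(host, projectID, taskID string) string {
	u := url.URL{
		Scheme: "https",
		Host:   host,
		Path:   "/2/projects/" + projectID + "/tasks/" + taskID,
	}
	return u.String()
}

// taskLogURL returns the assumed endpoint for a task's log output.
func taskLogURL(host, projectID, taskID string) string {
	return taskURL(host, projectID, taskID) + "/log"
}
```

Both would be fetched with a GET request authenticated with the same project token used to queue the task.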

Queuing a task like this gives you the flexibility to schedule work at specific intervals or in response to events. There are many use cases where a web request could hand off its work by queuing a task. This type of architecture provides a clean separation of concerns, with scalability and redundancy built in. It keeps our web applications focused and optimized for handling user requests, and it pushes asynchronous and background work to a cloud environment designed and architected to handle things at scale.

As Outcast grows, we will continue to leverage all the services that Iron.IO and the cloud have to offer. There is a lot of data that needs to be downloaded, processed, and then delivered to users through the mobile application. By building a scalable architecture today, we can handle what happens tomorrow.